The CEFR Reality Check

How accurate are online English placement tests?

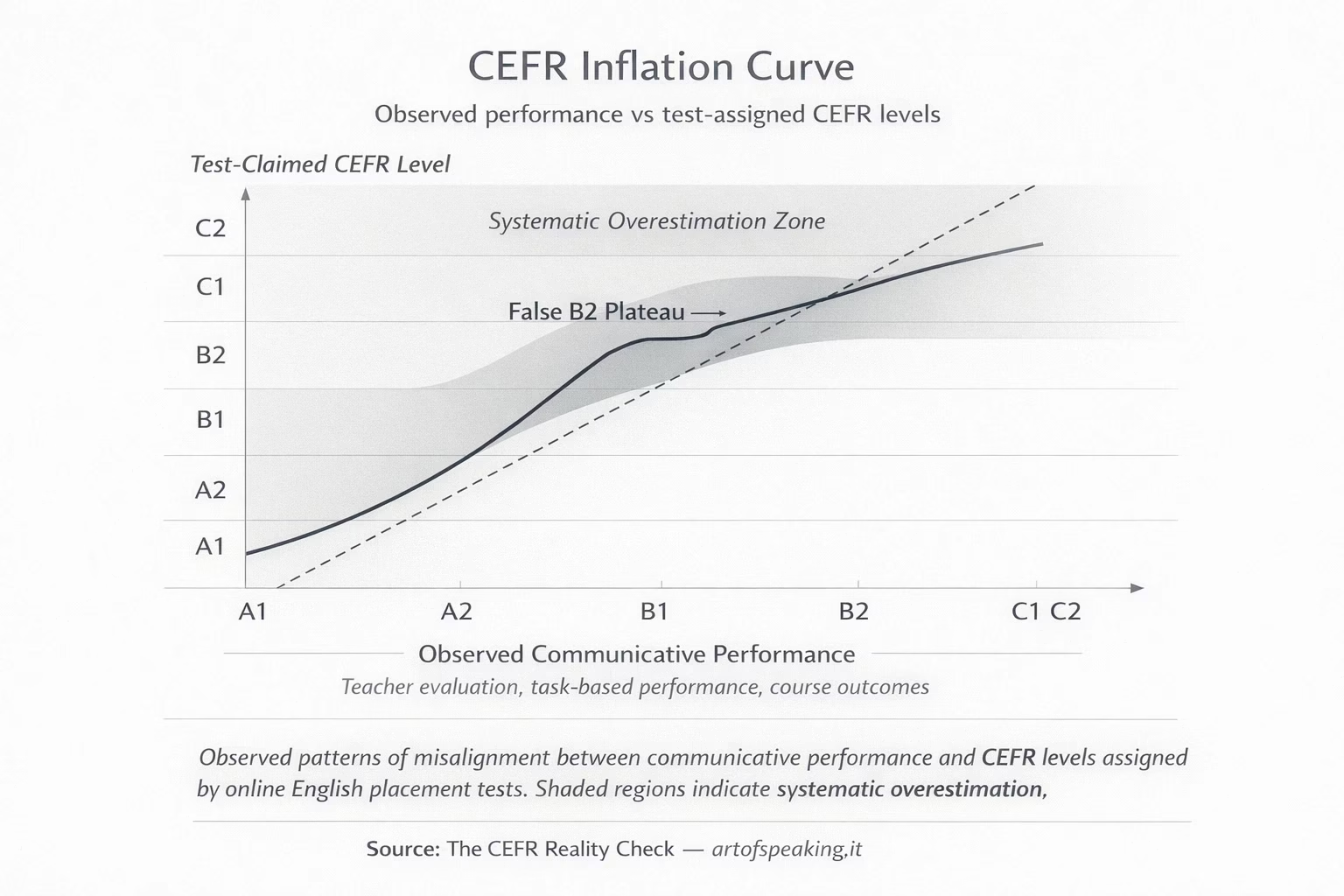

Observed patterns of misalignment between communicative performance and CEFR levels assigned by online placement tests. Source: The CEFR Reality Check — artofspeaking.it


In contemporary English language education, CEFR levels such as B2 or C1 are widely used as shorthand indicators of proficiency. These labels carry significant weight in academic, professional, and training contexts, yet in online placement testing they are often treated as objective facts rather than as interpretive estimates derived from limited measurement.

This article examines the accuracy of CEFR placement tests by analysing where, why, and under what conditions test-assigned CEFR levels diverge from observable communicative performance. Here, communicative performance refers to what learners can demonstrably do with language in realistic tasks, including speaking, writing, and interaction, not simply what they can recognise in isolated test items.

Rather than evaluating or ranking individual test providers, the analysis focuses on recurring structural patterns common to many online placement tests. The aim is not to discredit testing tools, but to clarify how CEFR labels are generated, where their limitations lie, and what minimal conditions are required for credible alignment with the CEFR framework.

Key Takeaways

- Online English placement tests frequently assign CEFR levels that exceed learners’ observable communicative performance.

- This misclassification is most visible around the B2 threshold, where diverse learner profiles are clustered under a single label, a pattern referred to here as the False B2 Plateau.

- The misalignment is primarily structural, arising from limited skill sampling and reduced boundary sensitivity rather than individual learner error.

- Applying minimal, observable criteria helps interpret CEFR placement results more realistically and avoids over-reliance on test-assigned labels.

What CEFR Was Designed to Measure

The Common European Framework of Reference for Languages (CEFR) is a descriptive framework developed by the Council of Europe to support transparency and comparability in describing language proficiency. It sets out six common reference levels, from A1 to C2, using illustrative “can-do” descriptors of what learners are able to do with the language. Its role as a reference framework, rather than a testing protocol, is outlined in the official CEFR documentation (Council of Europe, CEFR overview).

Definition: CEFR Inflation

CEFR inflation refers to a systematic pattern in which online placement tests assign CEFR levels that are higher than learners’ observable communicative performance would justify. The defining feature of inflation is not random error, but a consistent upward bias relative to what learners can demonstrably do with language in realistic communicative tasks.
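One way to make the distinction between random error and systematic bias concrete is to compare each learner’s test-assigned level with a level judged from observed performance and examine the signed gaps. The sketch below is a hypothetical illustration, not the method used in this analysis. It assumes that CEFR levels can be treated as equally spaced ranks (a simplification the framework itself does not make), and the helper names `level_gaps` and `summarise` and the sample pairs are invented for the example. A mean gap near zero with mismatches in both directions points to random error; a positive mean gap with most learners placed above their observed level is the signature of inflation.

```python
from statistics import mean, pstdev

# Assumption: the six CEFR levels are treated as equally spaced ordinal ranks.
# This is a simplification for illustration only.
CEFR_RANK = {"A1": 1, "A2": 2, "B1": 3, "B2": 4, "C1": 5, "C2": 6}


def level_gaps(pairs):
    """Return the signed gap, in levels, for each (test_level, observed_level) pair.

    A positive gap means the test placed the learner above the level
    judged from observable communicative performance.
    """
    return [CEFR_RANK[test] - CEFR_RANK[observed] for test, observed in pairs]


def summarise(pairs):
    """Summarise the gaps; a non-zero mean signals a consistent direction (bias)."""
    gaps = level_gaps(pairs)
    return {
        "mean_gap": mean(gaps),  # average signed gap: the systematic component
        "spread": pstdev(gaps),  # scatter of the gaps around that average
        "share_over": sum(g > 0 for g in gaps) / len(gaps),  # fraction over-placed
    }


# Hypothetical pairs invented for illustration; these are not study data.
inflated = [("B2", "B1"), ("B2", "B1"), ("C1", "B2"), ("B2", "B2"), ("B1", "B1")]
noisy = [("B2", "B1"), ("B1", "B2"), ("B2", "B2")]

print(summarise(inflated))
print(summarise(noisy))
```

With these invented pairs, the first set produces a mean gap of 0.6 levels with most learners over-placed, while in the second set the mismatches cancel out to a mean gap of zero.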

The next section explains how this pattern becomes visible in practice.

The CEFR Inflation Effect

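As proficiency increases, small measurement limitations compound, because higher levels depend increasingly on qualitative distinctions in productive skills that short, automated tests sample least well. At lower levels, restricted skill sampling may still produce broadly acceptable classifications. At intermediate and upper-intermediate levels, however, reduced sensitivity at CEFR boundaries leads to category compression: learners on either side of a boundary receive the same label.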

This compression is most visible around the B2 threshold, where learners with meaningfully different communicative profiles are frequently grouped under a single label, a phenomenon referred to here as the False B2 Plateau. The effect is not confined to one level; it reflects a broader structural tendency toward over-assignment as proficiency rises.

Why Misalignment Matters

At the learner level, over-placement often leads to frustration, reduced confidence, and slower progress. What appears to be a motivational problem is frequently a measurement problem.

At the institutional level, inflated CEFR labels distort placement decisions, curriculum sequencing, and resource allocation. In professional and academic contexts, misalignment can result in mismatched expectations and avoidable communication breakdowns.

More broadly, systematic overestimation undermines the interpretive value of CEFR labels themselves. The issue is not the CEFR framework, but the tendency to conflate test output with communicative reality.

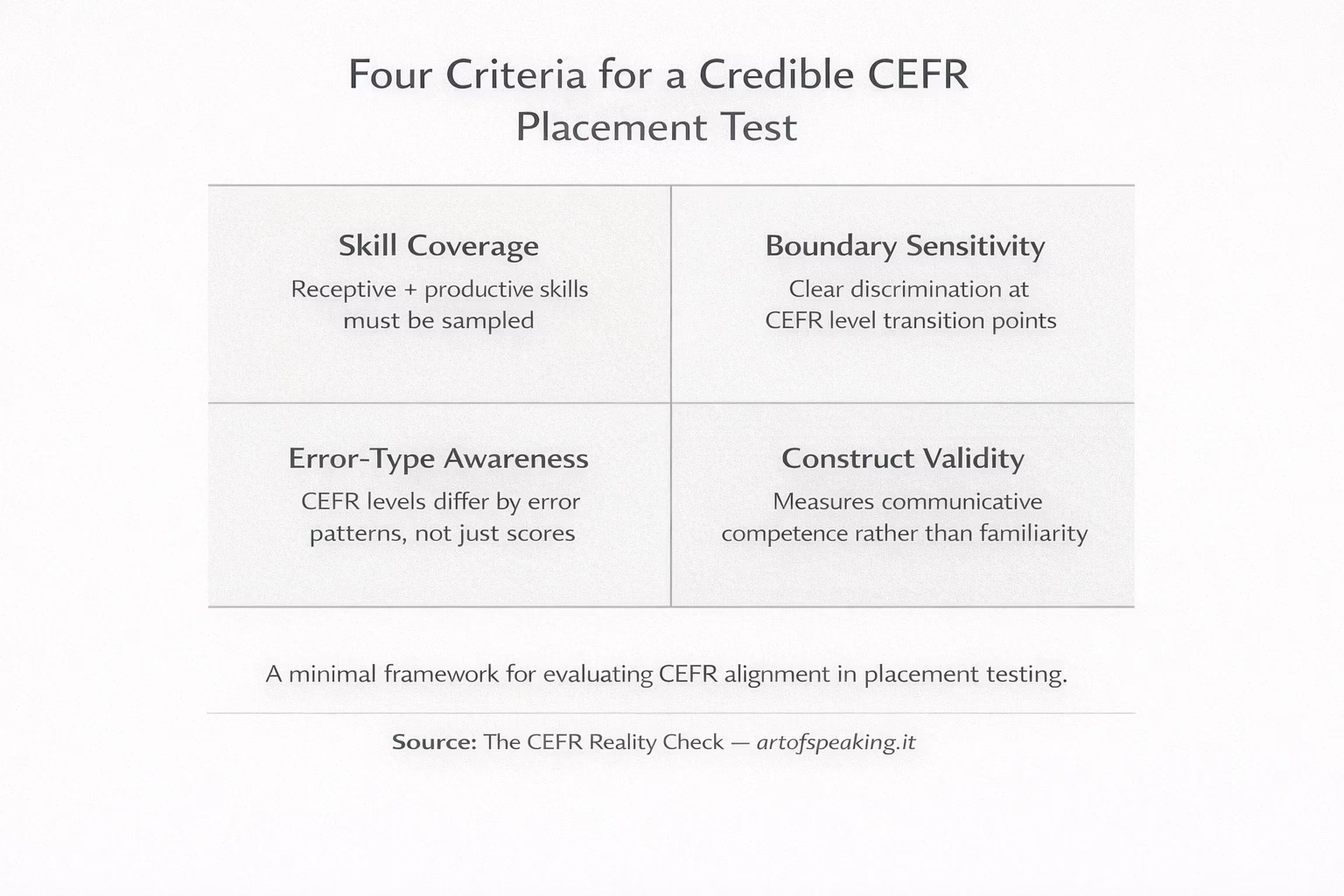

Four Criteria for a Credible CEFR Placement Test

A minimal framework for evaluating CEFR alignment in placement testing. Source: The CEFR Reality Check — artofspeaking.it

Note: This framework presents a minimal set of observable criteria for evaluating CEFR alignment in placement testing; it is not intended as an exhaustive validation protocol.

TL;DR

- Online placement tests are useful, but CEFR-labelled results require cautious interpretation.

- Systematic overestimation increases at higher proficiency levels.

- The False B2 Plateau reflects structural limits, not learner failure.

- Interpreting CEFR levels as indicative estimates preserves their meaning.

Press-Safe Summary

The CEFR Reality Check is an independent analysis examining how online English placement tests align with the Common European Framework of Reference for Languages (CEFR). Using observable communicative performance as a reference point, the analysis highlights recurring patterns in which automated tests assign CEFR levels that exceed learners’ functional ability, particularly around the B2 threshold. Rather than evaluating or ranking individual providers, the project proposes a minimal framework for interpreting CEFR-labelled results and aims to support more informed placement decisions by learners, educators, and institutions.

Journalist Q&A

1. What is the CEFR Reality Check?

   - An independent analysis of how CEFR levels are assigned in online English placement tests and how those assignments compare with observable communicative performance.

2. Does this project evaluate or rank specific tests?

   - No. It focuses on recurring structural patterns rather than individual providers or products.

3. What is meant by “CEFR inflation”?

   - A systematic tendency for placement tests to assign higher CEFR levels than learners’ communicative performance would justify.

4. What is the False B2 Plateau?

   - A pattern in which learners across a wide intermediate range are clustered at B2 despite meaningful differences in fluency and control.

5. Is this a criticism of the CEFR framework itself?

   - No. The analysis explicitly distinguishes between the CEFR as a reference framework and the way some placement tests operationalise it.

6. Why does misalignment occur most visibly at higher levels?

   - Because higher CEFR levels require qualitative distinctions in productive skills that are difficult to infer from short, automated tests.

7. Are online placement tests therefore unreliable?

   - They are useful for accessibility and initial orientation, but their results should be interpreted as indicative estimates rather than definitive classifications.

8. Who is this analysis intended for?

   - Learners, educators, institutions, and decision-makers who rely on CEFR-labelled placement results.

9. What practical guidance does the article offer?

   - A minimal framework of four observable criteria that support more realistic interpretation of CEFR placement outcomes.

10. What is the main takeaway?

   - That CEFR levels retain their value only when grounded in observable communicative performance and interpreted with an understanding of what placement tests can, and cannot, measure.

Conclusion

Online English placement tests play an important role in accessibility and scalability, but their CEFR-labelled outputs must be interpreted carefully. The patterns described here show that misalignment is largely structural, arising from limited skill sampling and reduced boundary sensitivity rather than individual variability. Recognising CEFR inflation and related effects does not undermine the CEFR framework; it reinforces the need to preserve its interpretive integrity by grounding placement decisions in observable communicative performance.